Leveraging accurate location data unlocks powerful opportunities across your business. With precise, comprehensive, and fresh points-of-interest data, you can enrich your datasets for deeper insights, drive hyper-personalized user experiences, and make data-driven decisions. To empower our users, we continuously iterate on our methodology and leverage cutting-edge technology to ensure the most reliable and comprehensive POI data possible.

The Tale of Two Places Systems

Foursquare’s journey in building location data has been transformative. We started as a pioneer in leveraging user-generated content from our consumer apps to create a rich, dynamic database of venues around the world. Through check-ins, venue additions, and suggested edits, users helped capture real-world details that traditional sources often missed. In 2020, we acquired Factual, which built a comprehensive Places database using digital sources and advanced machine learning.

Drawing from our experiences with two Places systems, we developed the Places Engine by integrating the best features of both and applying critical lessons from our earlier efforts. This new engine addresses the challenge of keeping a Places database in sync with the real world, combining user-generated insights and machine learning-driven data integration to deliver more reliable data. We’re excited to share the story of how this technology evolved from these two distinct but powerful legacy approaches.

Foursquare Legacy Places System: Crowdsourcing the World’s POIs

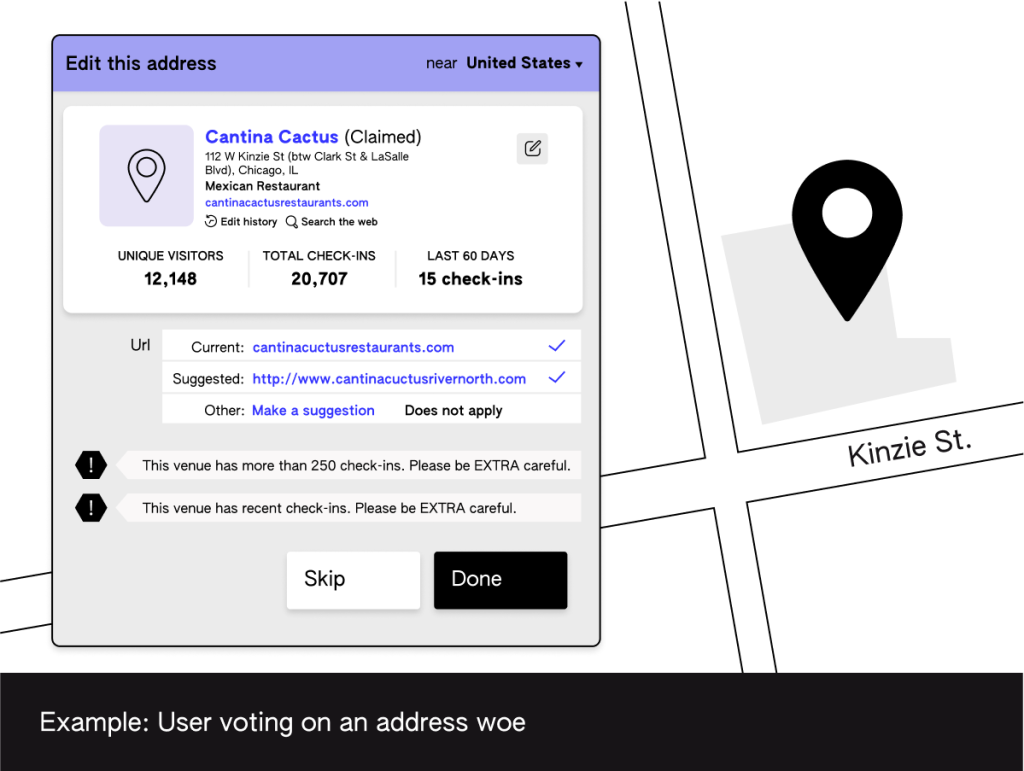

Foursquare’s legacy Places system was built on a foundation of crowd-sourcing, leveraging the power of the active users of our mobile apps to create and maintain a robust database of points of interest (POIs). This system allowed any user to create a new place or suggest edits to existing places, fostering a dynamic and responsive dataset that could quickly adapt to changes in the real world. The edits proposed by users, known as “woes,” were then subject to a unique verification process.

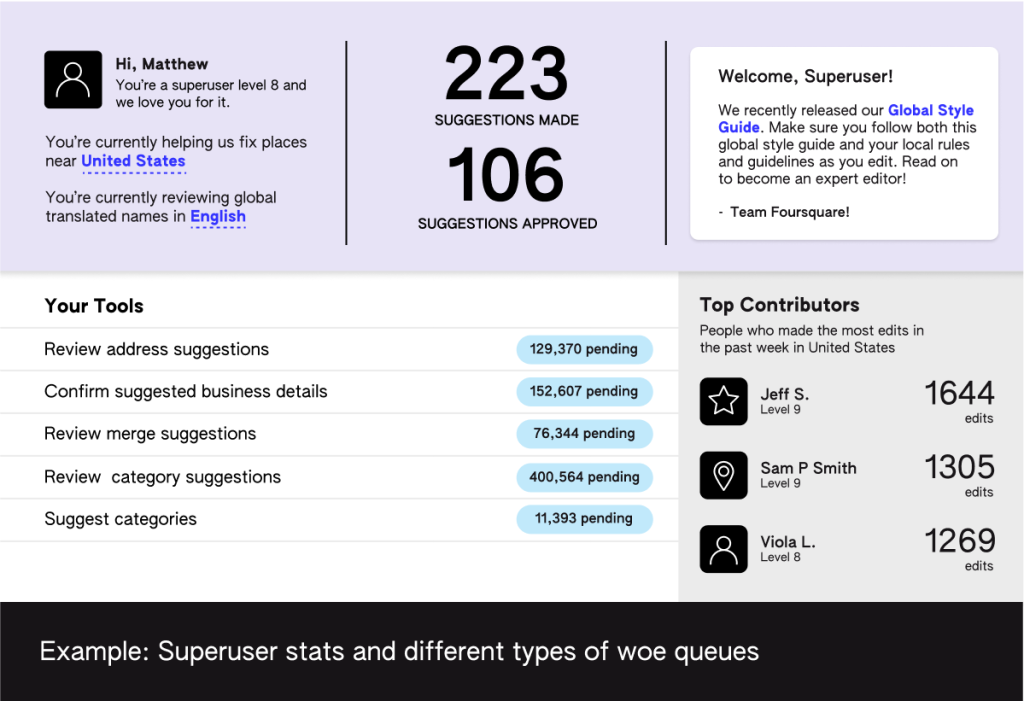

At the heart of this crowd-sourced system was a hierarchy of user roles and permissions. Regular Foursquare users could act as “reporters,” proposing woes to add new places or edits to existing ones. To maintain data quality, the platform also featured a special class of users called “superusers.” These superusers had the ability to both report and “vote” on proposed woes, acting as a human filter to ensure accuracy. This superuser system was further stratified into levels, ranging from 1 to 10, with higher levels granted more authority and responsibility in the woe approval process. These levels depended on the user’s credibility and their track record with past edits.

Each woe had a dynamically computed acceptance threshold. This varied based on factors like a place’s popularity or other contextual rules. Users had dynamic trust scores based on their role and accuracy of past contributions. Reporters earned trust scores by making accurate suggestions, while superusers earned them by approving correct edits and rejecting incorrect ones. These scores were adjusted continuously, rewarding accuracy and penalizing errors.
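To make the trust-score mechanics concrete, here is a minimal sketch of a score that rises with accurate contributions and falls with inaccurate ones. The article does not publish the actual formula, so the step sizes, the 0 to 1 range, and the names `Contributor` and `update_trust` are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical constants: the real reward and penalty sizes are not published.
REWARD = 0.05
PENALTY = 0.10
MIN_TRUST, MAX_TRUST = 0.0, 1.0


@dataclass
class Contributor:
    name: str
    role: str            # "reporter" or "superuser"
    trust: float = 0.5   # assumed starting score


def update_trust(contributor: Contributor, was_correct: bool) -> float:
    """Reward an accurate contribution and penalize an inaccurate one.

    For a reporter, "correct" means the proposed woe was accepted.
    For a superuser, it means the vote matched the final outcome.
    The result is clamped to the assumed [MIN_TRUST, MAX_TRUST] range.
    """
    delta = REWARD if was_correct else -PENALTY
    contributor.trust = min(MAX_TRUST, max(MIN_TRUST, contributor.trust + delta))
    return contributor.trust


reporter = Contributor("alice", "reporter")
update_trust(reporter, was_correct=True)    # score rises by REWARD
update_trust(reporter, was_correct=False)   # score falls by the larger PENALTY
```

Making the penalty larger than the reward is one possible design choice: it means a contributor needs a sustained record of accuracy to build trust.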

A woe could be accepted in two ways: either through the combined trust scores of multiple reporters or with the help of superuser votes. This approach enabled quick approval from trusted sources or broad consensus, while contentious changes were reviewed more carefully by superusers. This balance ensured rapid updates while maintaining data integrity, adapting the verification process to the importance of each change.
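The two acceptance paths can be sketched as a single rule: add up the trust of everyone backing a woe and compare it with the woe's threshold. This is only an illustration under assumptions. The additive model, the signed superuser votes, and the names `Woe`, `support`, and `is_accepted` are hypothetical, not the production logic.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Woe:
    place_id: str
    attribute: str
    proposed_value: str
    threshold: float  # assumed to be computed elsewhere, e.g. from place popularity
    reporter_trust: list[float] = field(default_factory=list)
    # Each superuser vote is (trust score, approve?).
    superuser_votes: list[tuple[float, bool]] = field(default_factory=list)


def support(woe: Woe) -> float:
    """Combined backing: reporter trust plus signed superuser votes."""
    from_reporters = sum(woe.reporter_trust)
    from_votes = sum(t if approve else -t for t, approve in woe.superuser_votes)
    return from_reporters + from_votes


def is_accepted(woe: Woe) -> bool:
    """A woe is accepted once its combined backing reaches its threshold."""
    return support(woe) >= woe.threshold


# Path 1: several reporters agree, no superuser needed.
consensus = Woe("p1", "phone", "212-555-0100", threshold=1.0,
                reporter_trust=[0.4, 0.3, 0.4])

# Path 2: a single reporter plus one trusted superuser approval.
vouched = Woe("p1", "phone", "212-555-0100", threshold=1.0,
              reporter_trust=[0.4], superuser_votes=[(0.8, True)])
```

Under this model a popular place would get a higher `threshold`, so a change to it needs either more reporters or more senior superusers before it is applied.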

While the legacy Foursquare system was effective at capturing nuanced insights, its primary limitation was its reliance on user activity and superuser approvals. This led to a substantial backlog of millions of unprocessed edits, which delayed valuable updates and hurt the freshness and accuracy of the Places database. Despite their dedication, superusers couldn’t keep up with the volume.

Factual Places System: Harnessing Digital Intelligence for POI Data

The Factual Places architecture took a sophisticated approach to building and maintaining a comprehensive database of places around the world from digital sources, leveraging the vast amount of information available online and from trusted partners.

The system followed a multi-stage process. First, data was ingested from sources like web crawls, syndicators, and partners. Then, the raw data from each source was canonicalized to create summary inputs. Next came the resolve phase, in which inputs were matched to existing entries or added as new locations, ensuring accurate and non-redundant records. The summarization stage then combined conflicting data into a single authoritative record, using consensus methods and machine learning models.

Next, the calibration phase assessed each record’s quality, such as accessibility and operational status, using heuristic and machine learning models trained on human-verified annotations. The final filtration stage applied quality checks to determine which records to include, based on factors like completeness, source credibility, and consistency.
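The stages described above can be sketched as a chain of small functions. This is a toy illustration, not Factual's implementation: the matching rule (same normalized name), the majority vote, the quality score, and every function name here are assumptions chosen to keep the example short.

```python
from collections import Counter, defaultdict


def canonicalize(raw: dict) -> dict:
    """Normalize one raw source record into a comparable input."""
    return {
        "source": raw["source"],
        "name": " ".join(raw["name"].lower().split()),
        "phone": "".join(ch for ch in raw.get("phone", "") if ch.isdigit()),
    }


def resolve(inputs: list) -> dict:
    """Group inputs that describe the same place (toy rule: same name)."""
    clusters = defaultdict(list)
    for item in inputs:
        clusters[item["name"]].append(item)
    return dict(clusters)


def summarize(cluster: list) -> dict:
    """Settle conflicting values by majority vote across sources."""
    phones = Counter(i["phone"] for i in cluster if i["phone"])
    phone = phones.most_common(1)[0][0] if phones else ""
    return {"name": cluster[0]["name"], "phone": phone, "n_sources": len(cluster)}


def calibrate(record: dict) -> dict:
    """Attach a toy quality score; the real stage used heuristics and ML models."""
    return {**record, "quality": min(1.0, record["n_sources"] / 3)}


def filtrate(records: list, min_quality: float = 0.5) -> list:
    """Keep only records that are complete and of sufficient quality."""
    return [r for r in records if r["quality"] >= min_quality and r["phone"]]


def run_pipeline(raw_records: list) -> list:
    inputs = [canonicalize(r) for r in raw_records]  # ingestion supplies raw_records
    summaries = [summarize(c) for c in resolve(inputs).values()]
    return filtrate([calibrate(s) for s in summaries])


raw = [
    {"source": "crawl", "name": "Joe's  Pizza", "phone": "(212) 555-0100"},
    {"source": "partner", "name": "joe's pizza", "phone": "212-555-0100"},
    {"source": "syndicator", "name": "Joe's Pizza", "phone": "212 555 0199"},
    {"source": "crawl", "name": "Corner Cafe", "phone": ""},
]
released = run_pipeline(raw)
```

In this sample, the three "Joe's Pizza" inputs collapse into one record with the phone number two sources agree on, while the single-source, phoneless "Corner Cafe" is filtered out.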

Through this multi-stage approach, the Factual system successfully maintained a database that accurately reflected the dynamic nature of real-world locations, leveraging the power of digital sources and automated processes to capture the ever-evolving landscape of place information.

While the Factual system was effective at integrating vast amounts of data from multiple sources, its primary limitation was the lack of human verification in the process. This led to inaccuracies, especially for subtle changes to businesses or locations that were not immediately reflected in the digital sources. It also led to underrepresentation of places lacking an online presence, biasing the dataset towards digitally visible locations.

Initial Integration

With Foursquare acquiring Factual, we were faced with the opportunity to combine the strengths of these two legacy methodologies, as well as the challenge of addressing each system’s limitations.

Our initial integration strategy was to treat Foursquare as an additional data source in the Factual system. We chose this approach in part for efficiency and expediency, and it rested on two primary hypotheses: (a) incorporating Foursquare as a data source within the Factual system would address the challenge of capturing places with minimal digital presence, and (b) despite a decline in active users, the Foursquare apps continued to generate substantial human-verified location data, which would be useful as ground truth in Factual’s calibration models.

Early results & learnings

The preliminary outcomes of this integration were encouraging. We observed a notable expansion in our global location coverage and a marked improvement in the accuracy of our machine learning models, attributable to the integration of Foursquare’s ground truth data. These early successes bolstered our confidence in the integrated approach. However, further analysis revealed several limitations:

- Lack of continuous human feedback: By treating Foursquare data as merely another input source, we inadvertently undermined the nuanced and granular real-time human verification process that was a cornerstone of Foursquare’s value proposition.

- Insufficient granular observability: While we were able to identify quality issues at the aggregate level through enhanced calibration, we lacked the telemetry needed to reason about the correctness of data at an individual record level. It was difficult to understand why a particular place record changed values for specific attributes between releases.

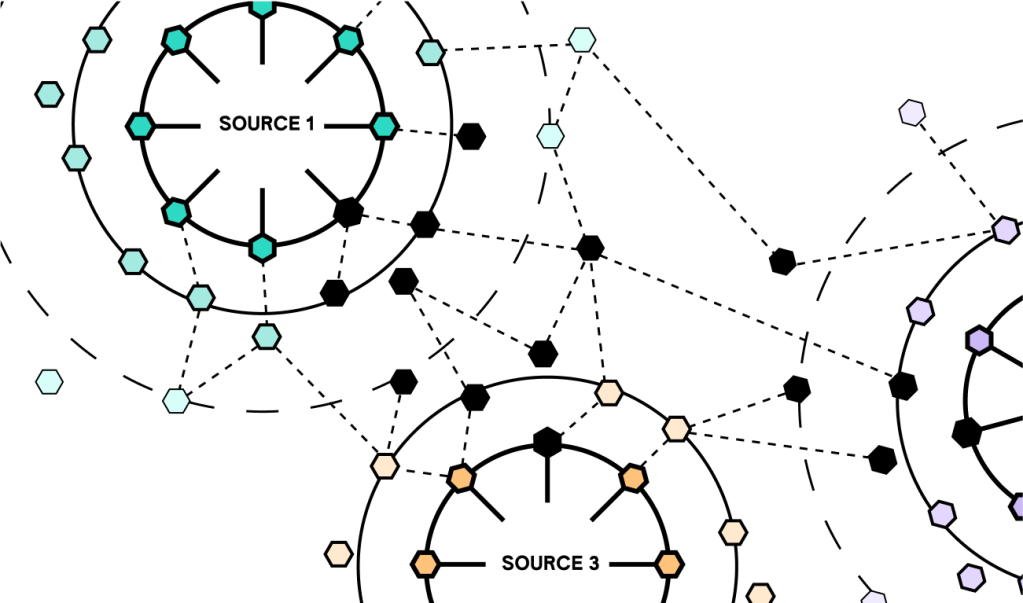

- Limited levers for quality improvements: Our primary method for improving data quality – the addition of new digital sources – became increasingly susceptible to what we termed the “Digital Echo Chamber” effect.

The Digital Echo Chamber effect represents a significant challenge in the location data industry. This phenomenon occurs when location datasets are published online and subsequently used by other companies to create new datasets, often in combination with the original sources. This cycle repeats, with each iteration affecting future datasets. As a result, data provenance becomes unclear, and errors can spread across multiple datasets. This issue is especially concerning with the rise of advanced technologies like Large Language Models (LLMs), which make web crawling for structured information more efficient. As these technologies become more common, the risk of spreading unverified, inaccurate data grows significantly.

Recognizing the need for innovation

Recognizing these challenges brought us to a critical juncture in our strategy. We realized that to achieve our goal of creating the most comprehensive and accurate global location dataset, we needed a new methodology that balanced the broad reach of digital data with the precision and granularity of real-time, human-verified insights. We had to revisit our approach to integrating these two systems.

Reimagining Places: The Birth of a New Engine

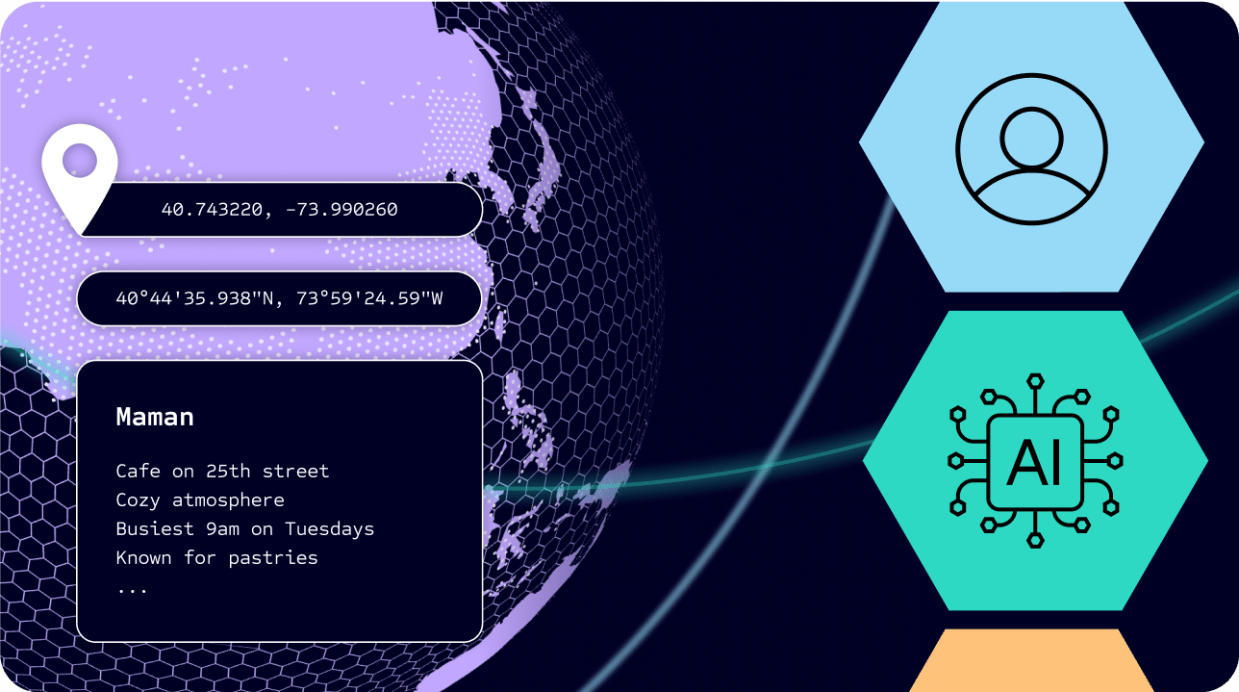

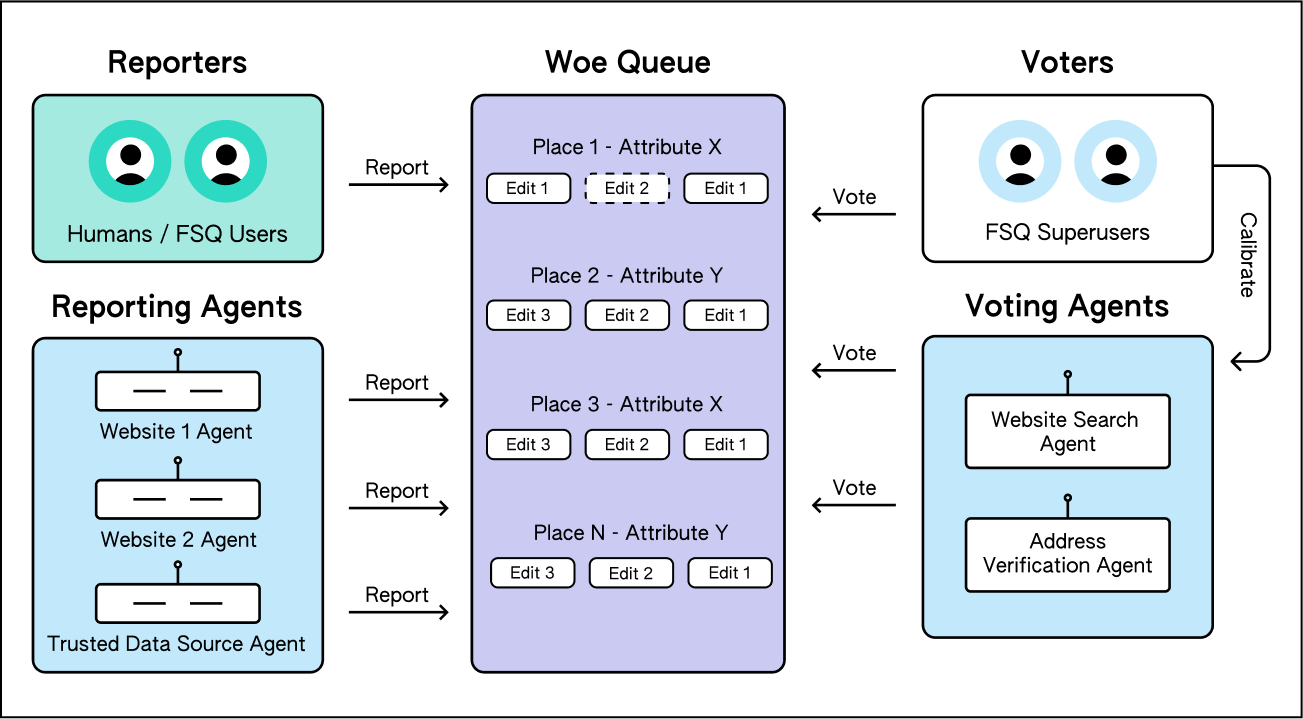

To solve the issues we identified, we developed the Places Engine, following a new model for integrating the two systems. Instead of treating Foursquare as a source in the Factual system, we made the crowdsourcing component of the Foursquare Places system the foundation on which the new system is built. We introduced two new kinds of AI-powered agents that mimic human behavior in this crowdsourced system: Reporting Agents and Voting Agents.

First, we created AI Reporting Agents for each of the digital sources in the Factual system. Each agent identifies changes in the data from the digital source it represents by comparing that data to the current authoritative representation of a Place. When it detects a change, it proposes woes just as a human user would, ensuring our database stays current with the latest information from all sources.
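As a rough sketch of what a Reporting Agent does, the function below compares one source's view of a Place with the authoritative record and emits one woe per differing attribute. The `Woe` fields, the function name `propose_woes`, and the rule that empty source values are ignored are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Woe:
    place_id: str
    attribute: str
    old_value: Optional[str]
    new_value: str
    reporter: str


def propose_woes(place_id: str, authoritative: dict, source_record: dict,
                 agent_name: str) -> list:
    """Propose one woe for every attribute where the source disagrees."""
    woes = []
    for attribute, new_value in source_record.items():
        if new_value in (None, ""):
            continue  # assumed rule: a missing value is not a proposed deletion
        old_value = authoritative.get(attribute)
        if old_value != new_value:
            woes.append(Woe(place_id, attribute, old_value, new_value, agent_name))
    return woes


authoritative = {"name": "Joe's Pizza", "phone": "212-555-0100"}
from_partner = {"name": "Joe's Pizza", "phone": "212-555-0199",
                "website": "https://example.com", "hours": ""}
woes = propose_woes("place-1", authoritative, from_partner, "partner_feed_agent")
```

Here the agent proposes a phone change and a new website, and stays silent on the name (unchanged) and hours (no value from the source). The proposed woes then go through the same acceptance process as human edits.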

Second, to complement our human superusers, we introduced AI Voting Agents that mimic superuser behavior. These agents vote on woes reported by both human users and Reporting Agents, creating a scalable system that is centered around the benefits of human curation. We created a selection of Voting Agents that employ different strategies on different types of woes. For instance, if a woe updates the contact info of a Place, a specific kind of Voting Agent crawls the website corresponding to that Place to check that the information in the woe is accurate.
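The contact-info example can be sketched as follows. To stay self-contained, the sketch assumes the Place's web page has already been fetched and passed in as text; the regular expression, the suffix-matching rule, and the names `Vote` and `vote_on_phone_woe` are illustrative assumptions rather than the actual agent.

```python
import re
from enum import Enum


class Vote(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    ABSTAIN = "abstain"


# Loose pattern for phone-like runs of digits and separators.
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def digits(text: str) -> str:
    """Strip everything except digits so formats can be compared."""
    return re.sub(r"\D", "", text)


def vote_on_phone_woe(proposed_phone: str, page_text: str) -> Vote:
    """Vote on a phone-number woe using text from the Place's website."""
    proposed = digits(proposed_phone)
    found = {digits(m) for m in PHONE_PATTERN.findall(page_text)}
    found = {f for f in found if len(f) >= 7}
    if not proposed or not found:
        return Vote.ABSTAIN  # no evidence either way
    # Suffix matching tolerates a missing or extra country code.
    if any(f.endswith(proposed) or proposed.endswith(f) for f in found):
        return Vote.APPROVE
    return Vote.REJECT


page = "Visit us downtown or call (212) 555-0100 to order."
matching = vote_on_phone_woe("+1 212-555-0100", page)
conflicting = vote_on_phone_woe("212-555-0199", page)
no_evidence = vote_on_phone_woe("212-555-0199", "Opening soon!")
```

Abstaining when the page shows no phone number keeps the agent from rejecting woes it simply cannot check, leaving those for other agents or human superusers.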

With the new engine, we were able to address the limitations of the early integration approach.

Continuous Human Feedback

With agents and humans working side by side in different roles, humans regulate not only other humans but also the inputs and votes provided by agents. The AI Voting Agents took care of most of the non-contentious woes, allowing the superusers (human voters) to focus on the contentious ones. As a result, superuser votes had an amplifying effect on the system:

- When a Reporting Agent reports bad woes and superusers reject them, the system automatically downgrades the trust score of that agent.

- When a Voting Agent makes a bad decision and a superuser catches it, that serves as feedback for the Voting Agent to recalibrate its algorithm.

- When a superuser detects two sources reinforcing each other’s inaccurate woes, the trust scores of both sources are downgraded.

As a result, we ended up with a self-governing system that helps mitigate the “Digital Echo Chamber” effect. Even during the testing phase of this new system, our superusers flagged multiple issues with agent woes and votes. For instance, woes submitted by an AI agent that published new places with badly formatted addresses were immediately caught by a superuser and rejected.
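The feedback rules in the list above can be sketched as one handler that runs whenever a superuser rejects a woe. Everything here is an assumption for illustration: the penalty size, the default score, and the idea of recording a Voting Agent's mistakes as recalibration examples.

```python
from dataclasses import dataclass, field

PENALTY = 0.1        # hypothetical step size
DEFAULT_TRUST = 0.5  # hypothetical starting score


@dataclass
class FeedbackState:
    trust: dict = field(default_factory=dict)           # reporting agent -> score
    voter_mistakes: dict = field(default_factory=dict)  # voting agent -> woe ids


def on_superuser_rejection(state: FeedbackState, woe_id: str,
                           reporting_agents: list,
                           approving_voting_agents: list) -> FeedbackState:
    """Apply feedback after a superuser rejects a woe.

    Every Reporting Agent that submitted the woe loses trust, so two sources
    echoing the same bad value are both downgraded. Voting Agents that
    approved it get the woe recorded as an example to recalibrate on.
    """
    for agent in set(reporting_agents):
        current = state.trust.get(agent, DEFAULT_TRUST)
        state.trust[agent] = max(0.0, current - PENALTY)
    for agent in set(approving_voting_agents):
        state.voter_mistakes.setdefault(agent, []).append(woe_id)
    return state


state = FeedbackState()
on_superuser_rejection(
    state,
    woe_id="woe-1",
    reporting_agents=["crawl_agent", "partner_agent"],  # echoing each other
    approving_voting_agents=["contact_voter"],
)
```

Because a single human rejection updates every agent that backed the woe, one superuser action corrects several automated contributors at once, which is the amplifying effect described above.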

Additionally, as this dataset integrates directly into our apps, new places created from digital sources get verified organically by Foursquare app users through check-ins, tips, and photo uploads. In some cases, we received photos and check-ins on a newly added place within a couple of days.

Granular Observability

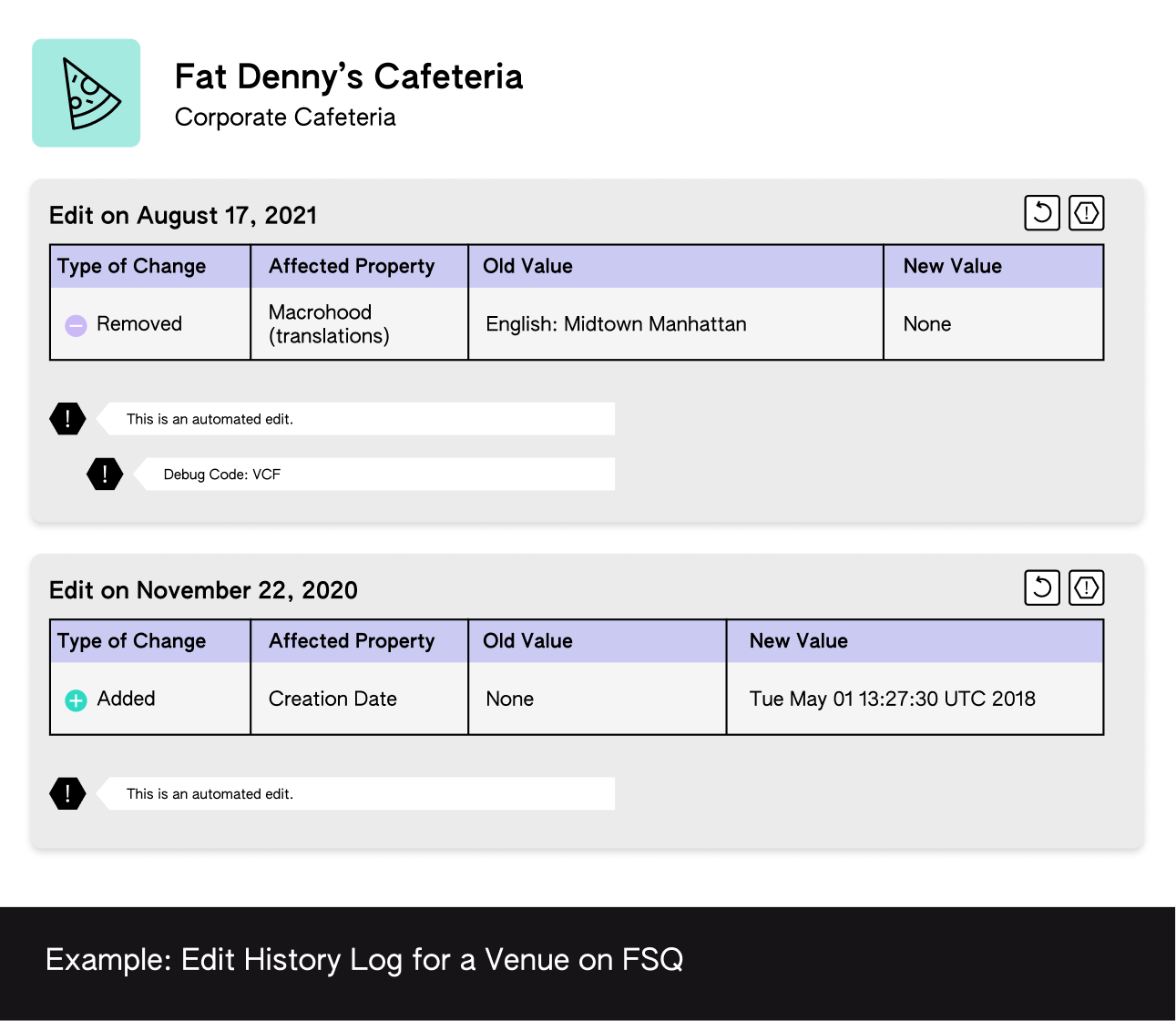

This new system also offers unprecedented observability at a per-Place level. Each Place has an audit log that tracks the series of accepted woes that led to its latest representation. In effect, we capture the entire history of edits and the users who proposed them. This lets us troubleshoot and identify problematic sources just by looking at a handful of Places that have the wrong values.
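One way to picture such an audit log is as an ordered list of accepted woes that can be replayed to rebuild a Place and to trace any value back to its proposer. The entry fields and the helper names below are assumptions for illustration, not the actual schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AcceptedWoe:
    sequence: int      # position in the Place's history
    attribute: str
    value: str
    proposed_by: str   # a human user or an agent


def current_state(log: list) -> dict:
    """Replay accepted woes in order to rebuild the latest representation."""
    state = {}
    for entry in sorted(log, key=lambda e: e.sequence):
        state[entry.attribute] = entry.value
    return state


def history(log: list, attribute: str) -> list:
    """All accepted values for one attribute, oldest first."""
    ordered = sorted(log, key=lambda e: e.sequence)
    return [e for e in ordered if e.attribute == attribute]


def last_editor(log: list, attribute: str) -> Optional[str]:
    """Who proposed the value that is currently live for this attribute."""
    entries = history(log, attribute)
    return entries[-1].proposed_by if entries else None


log = [
    AcceptedWoe(1, "name", "Joe's Pizza", "user:alice"),
    AcceptedWoe(2, "phone", "212-555-0100", "user:alice"),
    AcceptedWoe(3, "phone", "212-555-0199", "agent:partner_feed"),
]
place = current_state(log)
culprit = last_editor(log, "phone")
```

If the live phone number turns out to be wrong, `last_editor` points straight at the source that introduced it, which is the kind of per-record troubleshooting the earlier integration lacked.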

Multiple Quality Control Levers

Throughout our experience with various Places systems, we’ve consistently faced a common issue: limited mechanisms to enhance data quality. Our new Places Engine introduces a suite of tools that dramatically improve our ability to refine and adapt our POI data continuously:

- Add new AI Voting Agents: We now have the capability to introduce new AI voting agents that use different strategies/algorithms to resolve woes of different types. For instance, we started testing a new voting agent that uses spatial information to vote on woes corresponding to the geographical attributes of a Place.

- Add new digital sources as Reporting Agents: Because the system is self-governed with input from superusers, we can add more digital sources without fear of perpetuating inaccuracies.

- Prioritized Woe Queues (coming soon): We are investing in tools that direct our superusers to the most impactful woes. Their feedback improves the quality of the Places data directly and also indirectly, by helping calibrate the Voting Agents and Reporting Agents.

- Voter and Reporter APIs (coming soon): Many of our customers work with human workforces that provide feedback on Places. We are working on APIs that let customers plug those workforces into our system, increasing the amount of human input it receives.

These enhancements address the limitations we encountered in our early integration efforts, creating a more robust, accurate, and adaptable Places Engine.
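As an illustration of the first lever, a spatial Voting Agent might compare the coordinates proposed in a woe with a trusted reference point for the same Place. This is a sketch under assumptions: the reference point (for example a geocoded address), the distance thresholds, and the function names are hypothetical. The distance itself uses the standard haversine formula.

```python
import math

EARTH_RADIUS_M = 6_371_000   # mean Earth radius in meters
APPROVE_WITHIN_M = 100       # hypothetical threshold
REJECT_BEYOND_M = 1_000      # hypothetical threshold


def haversine_m(lat1: float, lng1: float, lat2: float, lng2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lng2 - lng1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def vote_on_location_woe(proposed: tuple, reference: tuple) -> str:
    """Approve nearby moves, reject implausibly far ones, abstain in between."""
    distance = haversine_m(*proposed, *reference)
    if distance <= APPROVE_WITHIN_M:
        return "approve"
    if distance > REJECT_BEYOND_M:
        return "reject"
    return "abstain"  # leave borderline cases to other voters


reference = (40.7484, -73.9857)
near = vote_on_location_woe((40.7485, -73.9857), reference)   # about 11 m away
far = vote_on_location_woe((40.8000, -73.9857), reference)    # several km away
```

The middle band where the agent abstains is deliberate: it routes ambiguous woes to human superusers instead of forcing an automated decision.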

Conclusion

The new Foursquare Places Engine represents a groundbreaking approach to Place data management. By carefully blending AI agents into a crowdsourced system alongside humans, we have been able to amplify the effects of human validation of Places data. As we brace for a world where LLM technologies amplify the Digital Echo Chamber effect and make it harder to distinguish between real and synthetic data, our architecture, which employs human validation at scale, allows us to maintain a Place database with unprecedented accuracy and freshness.

For our customers, this means reliable, up-to-date POI data. The Places Engine, and consequently the Foursquare Places dataset, helps our customers stay ahead of the competition with data they can trust. This innovation positions Foursquare at the forefront of the location data industry, providing the best foundation for businesses to thrive.

All of this innovation is made possible by our loyal superuser community, which is a cornerstone of our new engine. Over the next few weeks, we will share some exciting announcements about how we plan to invest in this community.

Get in touch to learn more about Foursquare Places

Authored by: Vikram Gundeti

Acknowledgments: Emma Cramer, Anastassia Etropolski, Kevin Sapp, Ali Lewin, Rosalyn Ku, Shamim Samadi, Runxin Li, Danielle Gardner, Byron Radrigan, Stephen Sporki, Evan Vu, Kazuto Nishimori, Ivan Gajic, Mike Spadafora, Miljana Vukoje, Steve Vitali, Xiaowen Huang, James Bogart, Saurav Bose, Srdjan Radojcic, Milos Magdelinic, Nikola Damljanovic, Milena Amidzic, Viktor Kolarov